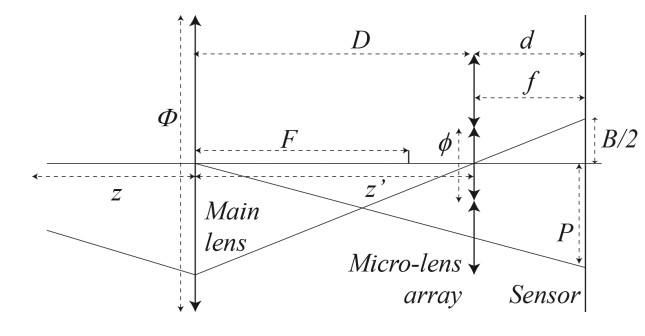

In this paper we study the light field sampling produced by ideal plenoptic sensors, an emerging technology providing new optical capabilities. In particular, we leverage its potential with a new optical design that couples a pyramid lens with an ideal plenoptic sensor. The main advantage is that it extends the field-of-view (FOV) of a main lens without changing its focal length. To demonstrate the utility of the proposed design, we have performed several experiments. First, we show on synthetic images that our optical design effectively doubles the FOV. Then, we show its feasibility with two prototypes built on commercially available plenoptic cameras with very different plenoptic samplings, namely a Raytrix R5 and a Canon 5D Mark IV. Arguably, future cameras with ideal plenoptic sensors could be coupled with pyramid lenses to extend their inherent FOV in a single snapshot.

“Plenoptic Sensor: Application to Extend Field-of-View”, B. Vandame, V. Drazic, M. Hog, N. Sabater, 26th European Signal Processing Conference (EUSIPCO), Rome, Sept. 3-7, 2018.


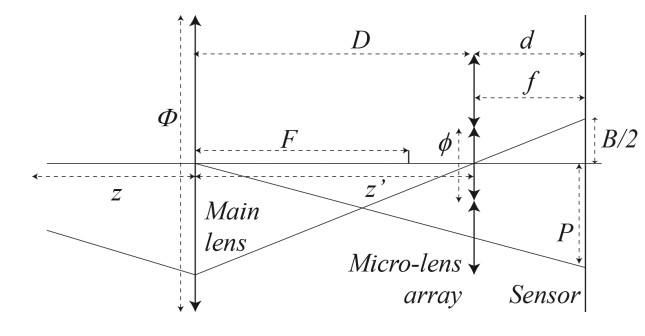

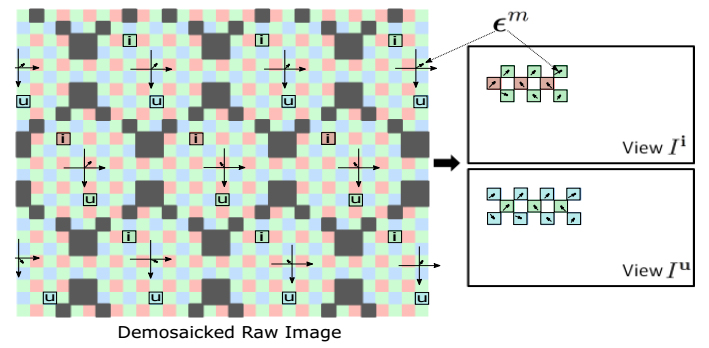

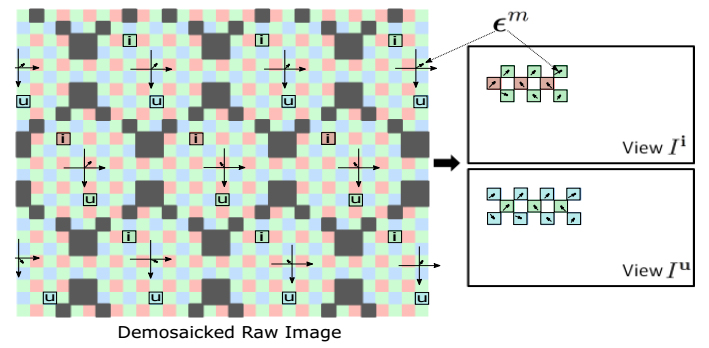

Light field imaging has recently been made available to the mass market by Lytro and Raytrix commercial cameras. Thanks to a grid of microlenses placed in front of the sensor, a plenoptic camera simultaneously captures several images of the scene under different viewing angles, providing an enormous advantage for post-capture applications, e.g., depth estimation and image refocusing. In this paper, we propose a fast framework to re-grid, denoise and up-sample the data of any plenoptic camera. The proposed method relies on the prior sub-pixel estimation of micro-image centers and of inter-view disparities. Both objective and subjective experiments show the improved quality of our results in terms of preserving high frequencies and reducing noise and artifacts in low-frequency content. Since each pixel is recovered independently of the others, the algorithm is highly parallelizable on GPU.

“On plenoptic sub-aperture view recovery”, Mozhdeh Seifi, Neus Sabater, Valter Drazic, Patrick Pérez, 24th European Signal Processing Conference (EUSIPCO), 29 Aug.-2 Sept. 2016.


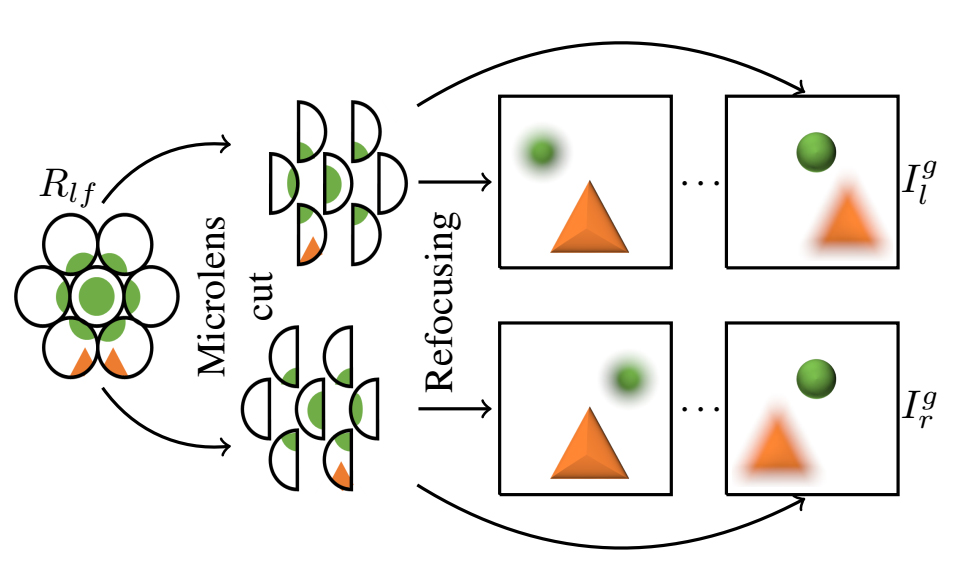

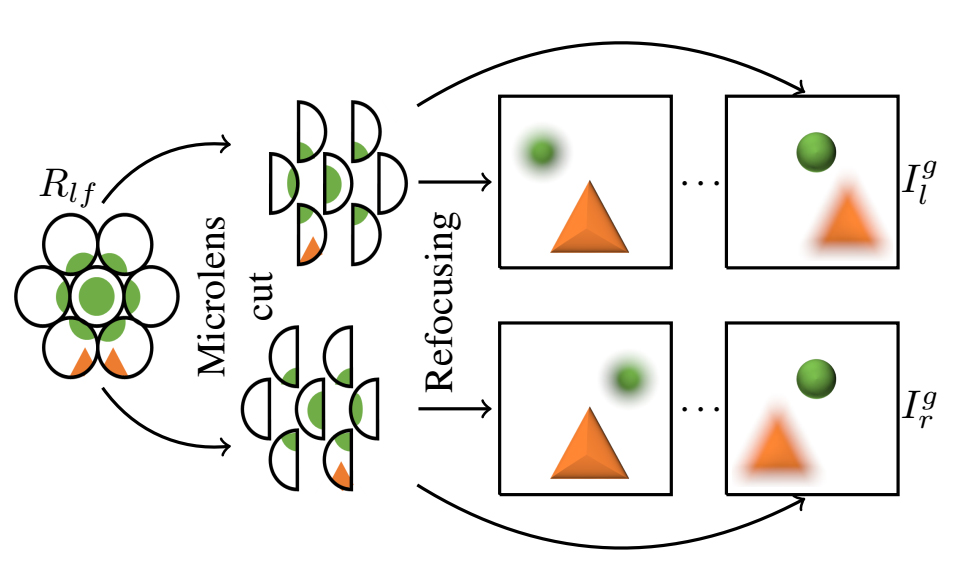

In this paper, we present a complete processing pipeline for focused plenoptic cameras. In particular, we propose (i) a new algorithm for microlens center calibration fully in the Fourier domain, (ii) a novel algorithm for depth map computation using a stereo focal stack and (iii) a depth-based rendering algorithm that is able to refocus at a particular depth or to create all-in-focus images. The proposed algorithms are fast and accurate, and they do not need to generate Subaperture Images (SAIs) or Epipolar Plane Images (EPIs), which is essential for focused plenoptic cameras. Also, the resolution of the resulting depth map is the same as that of the rendered image. We show results of our pipeline on Georgiev’s dataset and on real images captured with different Raytrix cameras.

“An Image Rendering Pipeline for Focused Plenoptic Cameras”, M. Hog, N. Sabater, B. Vandame, V. Drazic, IEEE Transactions on Computational Imaging, Vol. 14, No. 8, August 2015.
