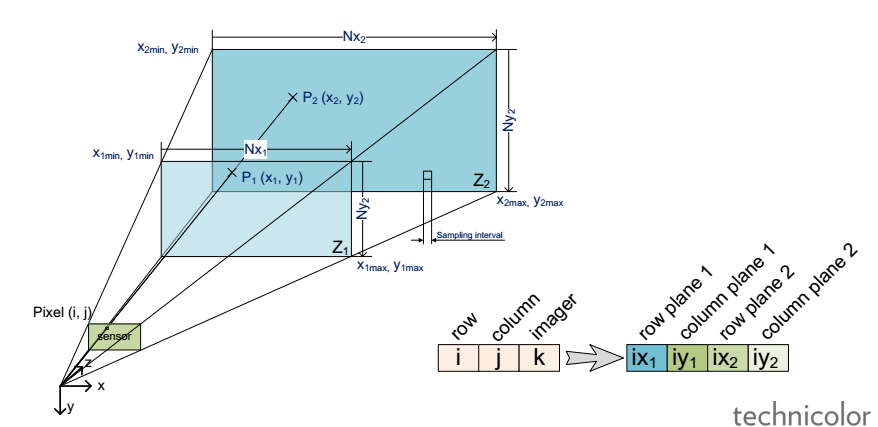

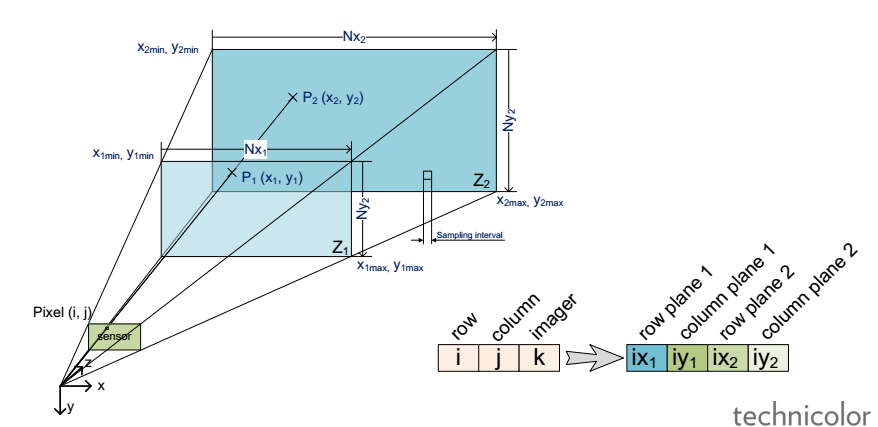

Light-field (LF) is foreseen as an enabler for the next generation of 3D/AR/VR experiences. However, the lack of unified representation, storage and processing formats, the variety of LF acquisition systems and capture-specific LF processing algorithms prevent cross-platform approaches and constrain the advancement and standardization of LF information. In this work we present our vision for a camera-agnostic format and processing of LF data, aiming at a common ground for LF data storage, communication and processing. As a proof of concept for a camera-agnostic pipeline, we present a new and efficient LF storage format (for 4D rays) and demonstrate the feasibility of camera-agnostic LF processing. To do so, we implement a camera-agnostic depth extraction method. We use LF data from a camera-rig acquisition setup and several synthetic inputs, including plenoptic and non-plenoptic captures, to emphasize the camera-agnostic nature of the proposed LF storage and processing pipeline.

“Camera-agnostic format and processing for light-field data”, Mitra Damghanian, Paul Kerbiriou, Valter Drazic, Didier Doyen, Laurent Blondé, 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), 10-14 July 2017

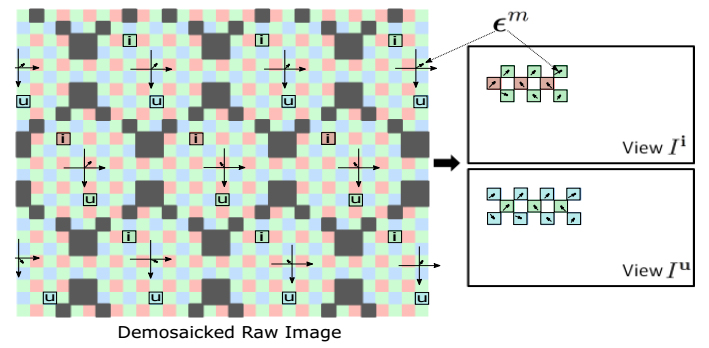

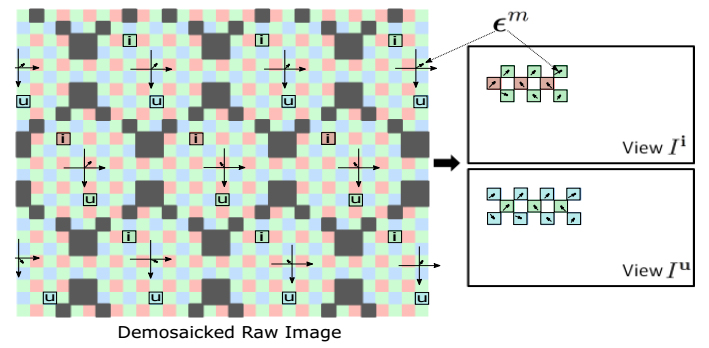

Light field imaging has recently been made available to the mass market by Lytro and Raytrix commercial cameras. Thanks to a grid of microlenses placed in front of the sensor, a plenoptic camera simultaneously captures several images of the scene under different viewing angles, providing an enormous advantage for post-capture applications, e.g., depth estimation and image refocusing. In this paper, we propose a fast framework to re-grid, denoise and up-sample the data of any plenoptic camera. The proposed method relies on the prior sub-pixel estimation of micro-image centers and of inter-view disparities. Both objective and subjective experiments show the improved quality of our results in terms of preserving high frequencies and reducing noise and artifacts in low-frequency content. Since the recovery of each pixel is independent of the others, the algorithm is highly parallelizable on GPU.

“On plenoptic sub-aperture view recovery”, Mozhdeh Seifi, Neus Sabater, Valter Drazic, Patrick Pérez, 24th European Signal Processing Conference (EUSIPCO), 29 Aug.-2 Sept. 2016

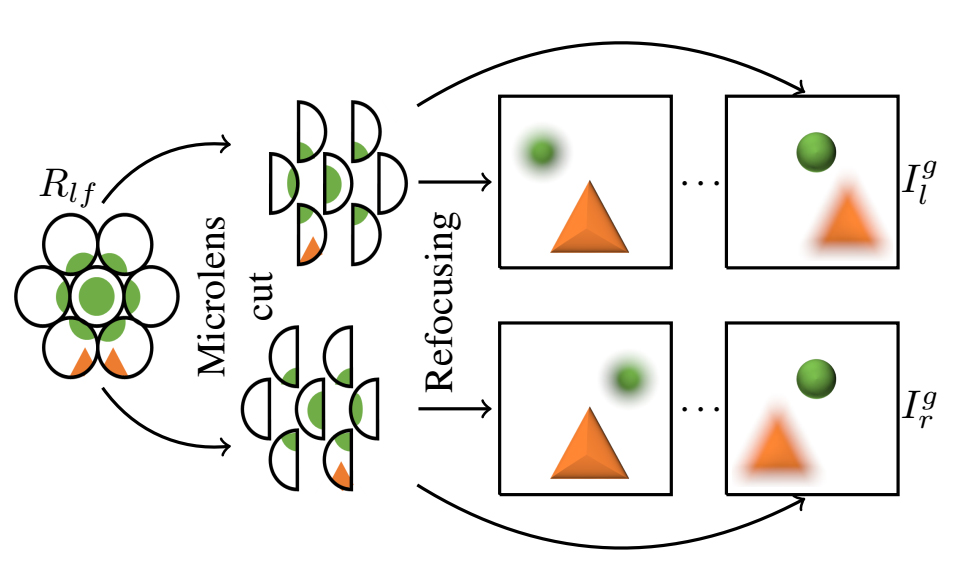

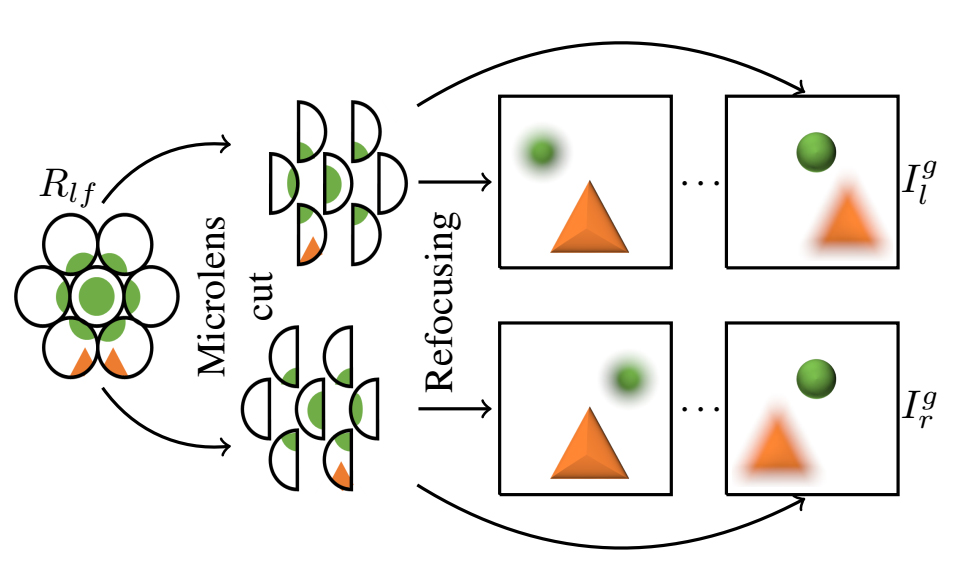

In this paper, we present a complete processing pipeline for focused plenoptic cameras. In particular, we propose (i) a new algorithm for microlens center calibration fully in the Fourier domain, (ii) a novel algorithm for depth map computation using a stereo focal stack and (iii) a depth-based rendering algorithm that is able to refocus at a particular depth or to create all-in-focus images. The proposed algorithms are fast, accurate and do not need to generate Subaperture Images (SAIs) or Epipolar Plane Images (EPIs), which is crucial for focused plenoptic cameras. Also, the resolution of the resulting depth map is the same as that of the rendered image. We show results of our pipeline on Georgiev’s dataset and on real images captured with different Raytrix cameras.

“An Image Rendering Pipeline for Focused Plenoptic Cameras”, M. Hog, N. Sabater, B. Vandame, V. Drazic, IEEE Transactions on Computational Imaging, Vol. 14, No 8, August 2015.

Generating depth maps along with video streams is valuable for cinema and television production. Thanks to the improvements of depth acquisition systems, the challenge of fusing depth sensing with disparity estimation is widely investigated in computer vision. This paper presents a new framework for generating depth maps from a rig made of a professional camera with two satellite cameras and a Kinect device. A new disparity-based calibration method is proposed so that registered Kinect depth samples become perfectly consistent with disparities estimated between rectified views. Also, a new hierarchical fusion approach is proposed for combining depth sensing and disparity estimation on the fly in order to circumvent their respective weaknesses. Depth is determined by minimizing a global energy criterion that takes into account the matching reliability and the consistency with the Kinect input. The depth maps generated in this way are relevant both in uniform and textured areas, without holes due to occlusions or structured light shadows. Our GPU implementation reaches 20 fps for generating quarter-pel accurate HD720p depth maps along with the main view, which is close to real-time performance for video applications. The estimated depth is of high quality and suitable for 3D reconstruction or virtual view synthesis.

“Fusion of Kinect depth data with trifocal disparity estimation for near real-time high quality depth maps generation”, G. Boisson, P. Kerbiriou, V. Drazic, O. Bureller, N. Sabater, A. Schubert. Proc. SPIE 9011, Stereoscopic Displays and Applications XXV, 90110J. IS&T/SPIE Electronic Imaging, 2014.

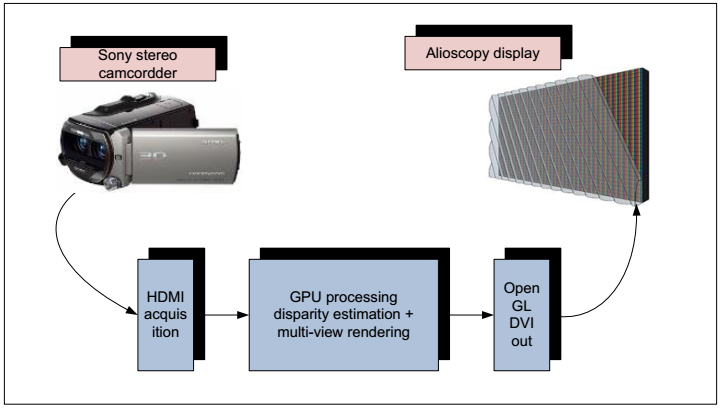

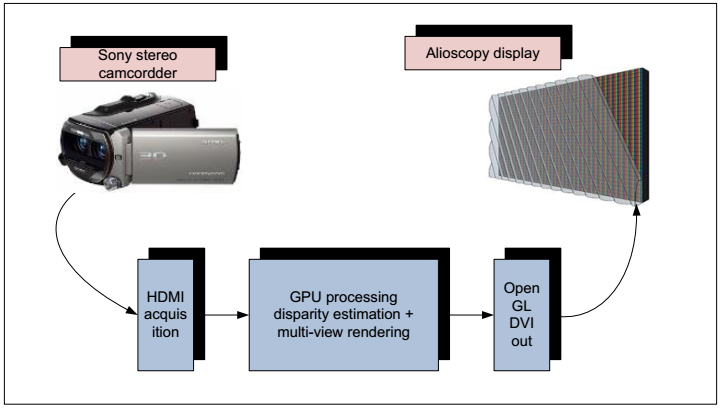

As often declared by customers, wearing glasses is a clear limiting factor for 3D adoption in the home. Auto-stereoscopic systems bring an interesting answer to this issue. These systems are evolving very fast, providing improved picture quality. Nevertheless, they require adapted content, which is today highly demanding in terms of computation power. The system we describe here is able to generate content adapted to these displays in real time: from a real-time stereo capture up to a real-time multi-view rendering. A GPU-based solution is proposed that ensures real-time processing of both disparity estimation and multi-view rendering on the same platform.

“A real-time 3D multi-view rendering from a real-time 3D capture”, D. Doyen, S. Thiebaud, V. Drazic, C. Thébault. Vol. 44, N°. 1 July, 578-581, SID 2013

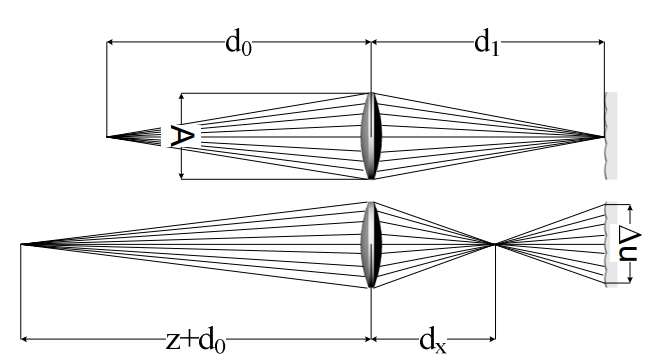

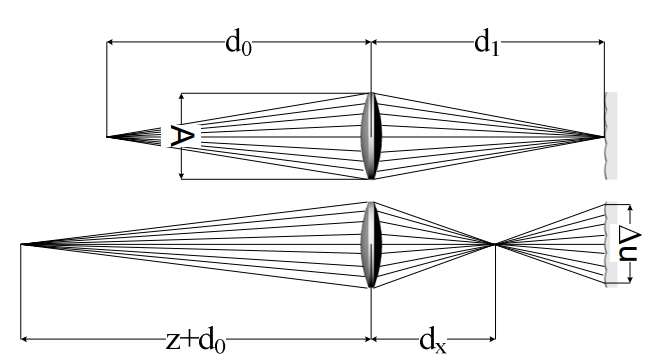

Plenoptic cameras are able to acquire multi-view content that can be displayed on auto-stereoscopic displays. Depth maps can be generated from the set of multiple views. As it is a single lens system, very often the question arises whether this system is suitable for 3D or depth measurement. The underlying thought is that the precision with which it is able to generate depth maps is limited by the aperture size of the main lens. In this paper, we will explore the depth discrimination capabilities of plenoptic cameras. A simple formula quantifying the depth resolution will be given and used to drive the principal design choices for a good depth measuring single lens system.

“Optimal Depth Resolution in Plenoptic Imaging”, V. Drazic, IEEE International Conference on Multimedia and Expo, 19-23 July 2010, Singapore (ICME 2010)
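
To give a feel for why the aperture size matters in the abstract above, here is a minimal sketch based on the textbook two-view triangulation relation z = f·b/d, not on the formula derived in the paper. It assumes the usable baseline of a single-lens system is bounded by the main lens aperture; the function name, parameters and numeric values are hypothetical and chosen only for illustration.

```python
def depth_resolution(z, focal_length_px, baseline, disparity_step_px):
    """Approximate smallest depth change resolvable at depth z.

    Derived from the standard triangulation relation z = f * b / d,
    whose derivative gives |dz| ~= z**2 / (f * b) * |dd|.
    This is an illustrative assumption, not the paper's formula.

    z                 -- scene depth (same length unit as baseline)
    focal_length_px   -- focal length expressed in pixels
    baseline          -- distance between the two viewpoints
    disparity_step_px -- smallest measurable disparity change, in pixels
    """
    return z ** 2 * disparity_step_px / (focal_length_px * baseline)


# Hypothetical numbers: a 1 cm aperture-limited baseline versus a 10 cm
# stereo rig, both at 2 m with quarter-pixel disparity accuracy.
single_lens = depth_resolution(2.0, 1000.0, 0.01, 0.25)
stereo_rig = depth_resolution(2.0, 1000.0, 0.10, 0.25)
print(single_lens, stereo_rig)
```

Under these assumptions the depth step grows with the square of the distance and shrinks in proportion to the baseline, which is the intuition behind the concern that a small aperture limits depth precision.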