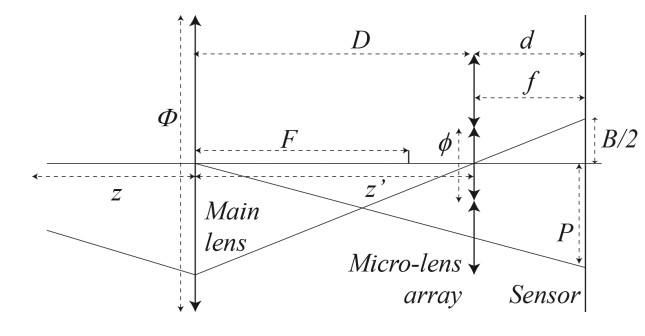

In this paper we study the light field sampling produced by ideal plenoptic sensors, an emerging technology that provides new optical capabilities. In particular, we leverage its potential with a new optical design that couples a pyramid lens with an ideal plenoptic sensor. The main advantage is that it extends the field-of-view (FOV) of a main lens without changing its focal length. To demonstrate the utility of the proposed design, we have performed several experiments. First, we show on simulated synthetic images that our optical design effectively doubles the FOV. Then, we show its feasibility with two different prototypes built from commercially available plenoptic cameras with very different plenoptic samplings, namely a Raytrix R5 and a Canon 5D Mark IV. Arguably, future cameras with ideal plenoptic sensors could be coupled with pyramid lenses to extend their inherent FOV in a single snapshot.

“Plenoptic Sensor: Application to Extend Field-of-View”, B. Vandame, V. Drazic, M. Hog, N. Sabater, 26th European Signal Processing Conference (EUSIPCO), Rome, Sept. 3-7, 2018
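As a back-of-the-envelope illustration of how the FOV can grow while the focal length stays fixed, here is a minimal sketch under simplifying assumptions that are not taken from the paper. We use a 1D model along one axis. Each sub-aperture view behind a pyramid facet keeps the native FOV of the main lens but is tilted by a deviation of plus or minus delta. The facet angle follows the thin-prism approximation delta ≈ (n - 1) * alpha. All function names are hypothetical.

```python
import math


def native_fov_deg(sensor_width_mm: float, focal_mm: float) -> float:
    """Angular FOV of a lens focused at infinity: 2 * atan(w / (2 f))."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def combined_fov_deg(native_deg: float, deviation_deg: float) -> float:
    """Union of two views with the native FOV, tilted by +/- deviation_deg.

    Assumes the two angular ranges overlap or just touch (no gap between them).
    """
    if deviation_deg > native_deg / 2.0:
        raise ValueError("deviation too large: the two views would leave a gap")
    return native_deg + 2.0 * deviation_deg


def thin_prism_apex_deg(deviation_deg: float, n: float = 1.5) -> float:
    """Facet apex angle from the thin-prism approximation delta ~ (n - 1) * alpha."""
    return deviation_deg / (n - 1.0)


native = native_fov_deg(36.0, 50.0)             # about 39.6 degrees
total = combined_fov_deg(native, native / 2.0)  # twice the native FOV
apex = thin_prism_apex_deg(native / 2.0)        # rough facet angle for n = 1.5
```

Setting the deviation to half the native FOV makes the two tilted views just touch, which doubles the covered angle along that axis. At angles this large the thin-prism formula is only a rough guide, and an actual design would need exact ray tracing through the facets.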

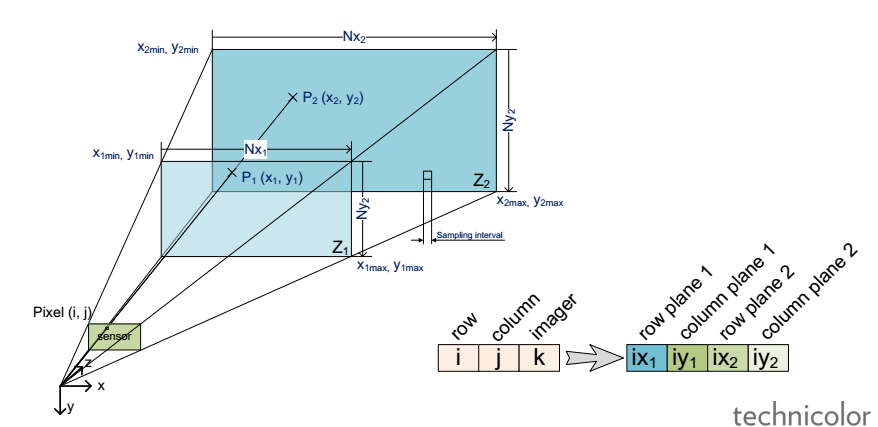

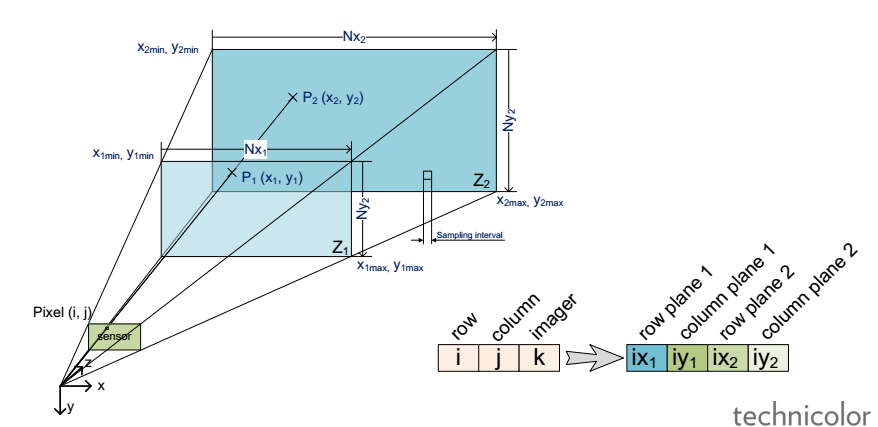

Light-field (LF) imaging is foreseen as an enabler for the next generation of 3D/AR/VR experiences. However, the lack of unified representation, storage and processing formats, the variety of LF acquisition systems, and capture-specific LF processing algorithms prevent cross-platform approaches and constrain the advancement and standardization of LF information. In this work we present our vision for a camera-agnostic format and processing of LF data, aiming at a common ground for LF data storage, communication and processing. As a proof of concept for a camera-agnostic pipeline, we present a new and efficient LF storage format (for 4D rays) and demonstrate the feasibility of camera-agnostic LF processing by implementing a camera-agnostic depth extraction method. We use LF data from a camera-rig acquisition setup and several synthetic inputs, including plenoptic and non-plenoptic captures, to emphasize the camera-agnostic nature of the proposed LF storage and processing pipeline.

“Camera-agnostic format and processing for light-field data”, Mitra Damghanian, Paul Kerbiriou, Valter Drazic, Didier Doyen, Laurent Blondé, 2017 IEEE International Conference on Multimedia & Expo Workshops (ICMEW), 10-14 July 2017
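The abstract does not detail the storage format itself. The sketch below shows one common camera-agnostic way to store 4D rays, the two-plane parametrization. This is an assumption and not necessarily the format proposed in the paper. Any ray given by an origin and a direction, whether it comes from a camera rig or a plenoptic camera, reduces to four numbers (u, v, s, t): its intersections with two parallel reference planes. The plane depths `z_uv` and `z_st` and the function name are hypothetical.

```python
import numpy as np


def ray_to_two_plane(origin, direction, z_uv=0.0, z_st=1.0):
    """Map rays (origin, direction) to (u, v, s, t) on the planes z = z_uv and z = z_st.

    Works on a single ray (shape (3,)) or a batch of rays (shape (N, 3)).
    """
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    dz = direction[..., 2]
    if np.any(np.isclose(dz, 0.0)):
        raise ValueError("rays parallel to the reference planes cannot be parametrized")
    # Ray parameter at which each ray crosses each plane.
    t_uv = (z_uv - origin[..., 2]) / dz
    t_st = (z_st - origin[..., 2]) / dz
    uv = origin[..., :2] + t_uv[..., None] * direction[..., :2]
    st = origin[..., :2] + t_st[..., None] * direction[..., :2]
    return np.concatenate([uv, st], axis=-1)


rays = ray_to_two_plane([[0, 0, -1], [1, 1, 0]], [[1, 0, 1], [0, 1, 2]])
```

A batch of N rays becomes a plain (N, 4) array that can be stored without any knowledge of the capturing device. That independence from the device is the property a camera-agnostic pipeline needs.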

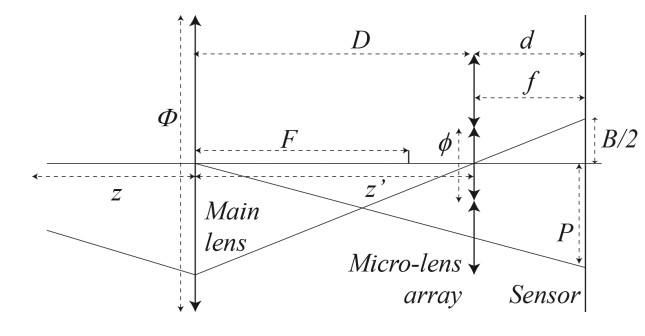

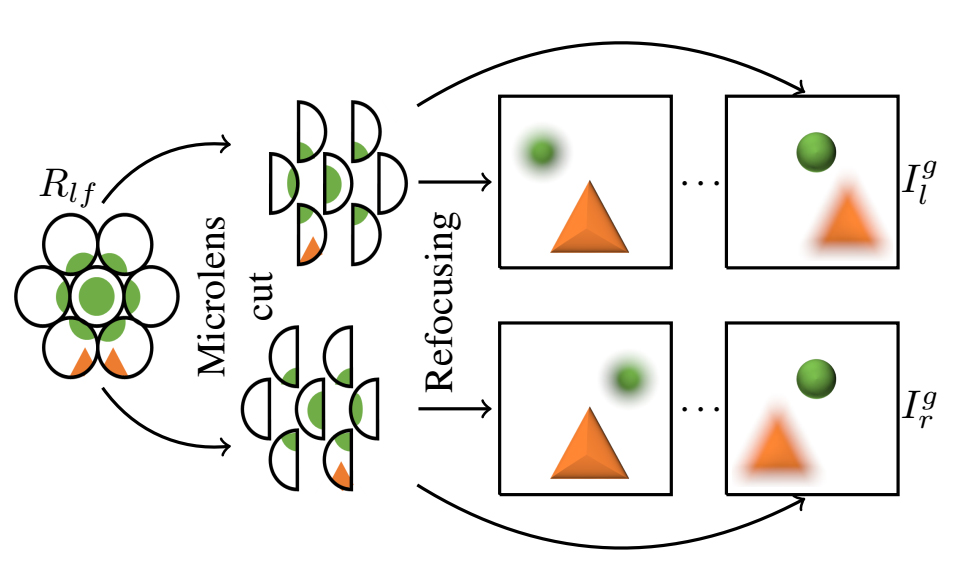

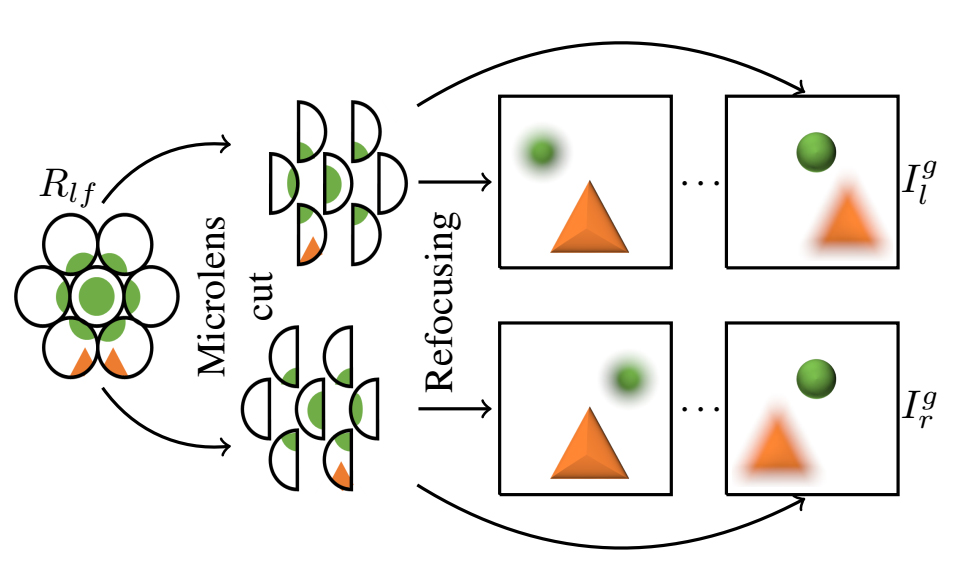

In this paper, we present a complete processing pipeline for focused plenoptic cameras. In particular, we propose (i) a new algorithm for microlens center calibration performed entirely in the Fourier domain, (ii) a novel algorithm for depth map computation using a stereo focal stack, and (iii) a depth-based rendering algorithm that can refocus at a particular depth or create all-in-focus images. The proposed algorithms are fast and accurate, and they do not need to generate Subaperture Images (SAIs) or Epipolar Plane Images (EPIs), which is crucial for focused plenoptic cameras. Also, the resulting depth map has the same resolution as the rendered image. We show results of our pipeline on Georgiev’s dataset and on real images captured with different Raytrix cameras.

“An Image Rendering Pipeline for Focused Plenoptic Cameras”, M. Hog, N. Sabater, B. Vandame, V. Drazic, IEEE Transactions on Computational Imaging, Vol. 14, No. 8, August 2015.
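The abstract only names the Fourier-domain calibration. The sketch below illustrates the general idea rather than the paper's algorithm, under these assumptions:

- The microlens grid is square and axis-aligned.
- The input is a "white" calibration image, i.e. a capture of a uniform scene in which each microlens forms a bright spot.

Along each axis, the grid period shows up as the strongest non-DC peak in the spectrum of the summed intensity profile. The phase of that peak gives the offset of the microlens centers. Real arrays are often hexagonal and slightly rotated. The names `estimate_axis`, `estimate_microlens_grid` and `min_period` are hypothetical.

```python
import numpy as np


def estimate_axis(profile: np.ndarray, min_period: float = 3.0):
    """Return (period, offset) in pixels of the dominant periodic component."""
    n = profile.size
    spectrum = np.fft.rfft(profile - profile.mean())
    # Only consider frequencies whose period is at least min_period pixels.
    k_max = min(int(n / min_period), spectrum.size - 1)
    k = 1 + int(np.argmax(np.abs(spectrum[1:k_max + 1])))
    period = n / k
    # The fundamental behaves like A*cos(2*pi*k*x/n + phase); its maxima (the
    # microlens centers) lie where the cosine argument is a multiple of 2*pi.
    phase = np.angle(spectrum[k])
    offset = (-phase * n / (2.0 * np.pi * k)) % period
    return period, offset


def estimate_microlens_grid(white: np.ndarray):
    """Estimate ((pitch_x, offset_x), (pitch_y, offset_y)) from a white image."""
    profile_x = white.sum(axis=0)  # intensity as a function of column x
    profile_y = white.sum(axis=1)  # intensity as a function of row y
    return estimate_axis(profile_x), estimate_axis(profile_y)
```

In this form the pitch is quantized to n / k for an integer frequency bin k. A practical calibration would refine the peak location to sub-bin precision and estimate the grid rotation as well.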

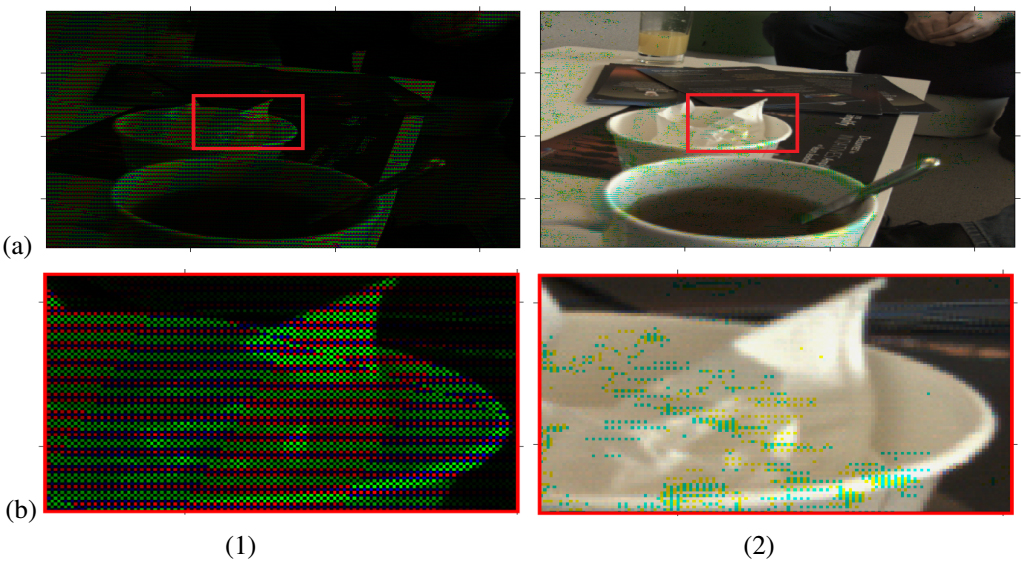

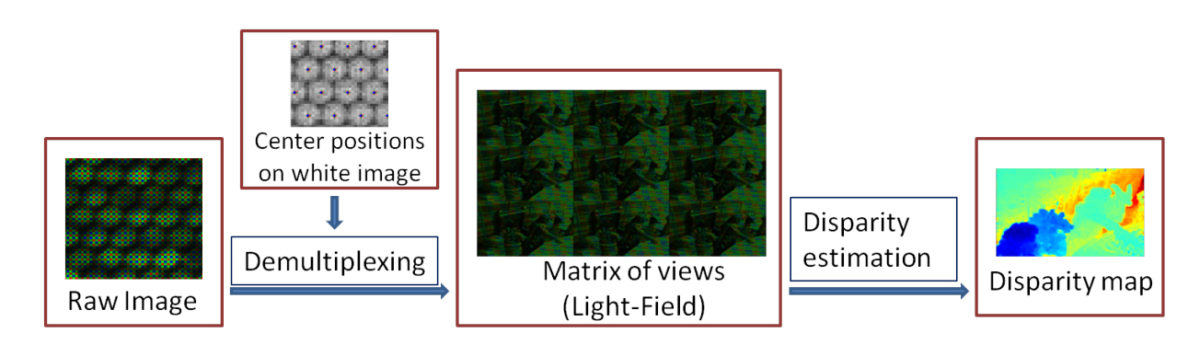

Light-field imaging has recently been introduced to the mass market by the hand-held plenoptic camera Lytro. Thanks to a microlens array placed between the main lens and the sensor, the captured data contains views of the scene from different viewpoints. This enables several post-capture applications, e.g., computationally changing the main lens focus. However, raw data conversion in such cameras is barely studied in the literature. The goal of this paper is to study the particularly overlooked problem of demosaicking the views of plenoptic cameras such as Lytro. We exploit the redundant sampling of scene content across the views and show that disparities estimated from the mosaicked data can guide the demosaicking, resulting in fewer artifacts than state-of-the-art methods. In addition, by properly addressing the view demultiplexing step, we take a first step towards light field super-resolution with negligible computational overhead.

“Disparity-guided demosaicking of light field images”, M. Seifi, N. Sabater, V. Drazic, P. Perez, IEEE International Conference on Image Processing (ICIP), 2014
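The sketch below shows why the demultiplexed views are themselves mosaicked. It relies on an idealized model:

- The microlens grid is square, with an integer pitch in pixels.
- The grid is aligned with an RGGB Bayer sensor.

All of these are assumptions; Lytro's grid is hexagonal with a non-integer pitch. Under this model, view (i, j) gathers pixel (i, j) under every microlens, and each gathered pixel keeps its Bayer color label. The function `demultiplex` is hypothetical.

```python
import numpy as np

# Hypothetical RGGB Bayer layout: color of pixel (y, x) is BAYER_RGGB[y % 2, x % 2].
BAYER_RGGB = np.array([["R", "G"], ["G", "B"]])


def demultiplex(raw: np.ndarray, pitch: int) -> dict:
    """Split a raw lenslet image into pitch x pitch views without demosaicking.

    Returns {(i, j): (values, color_labels)}, where view (i, j) collects
    pixel (i, j) under every microlens.
    """
    h, w = raw.shape
    ys, xs = np.mgrid[0:h, 0:w]
    cfa = BAYER_RGGB[ys % 2, xs % 2]  # color label of every raw pixel
    views = {}
    for i in range(pitch):
        for j in range(pitch):
            views[(i, j)] = (raw[i::pitch, j::pitch], cfa[i::pitch, j::pitch])
    return views
```

With an odd pitch, each view inherits a Bayer-like mosaic, so every view needs demosaicking. With an even pitch, each view contains a single color. In either case the color samples are spread across the views, which is why disparities between views can help reconstruct the missing colors.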

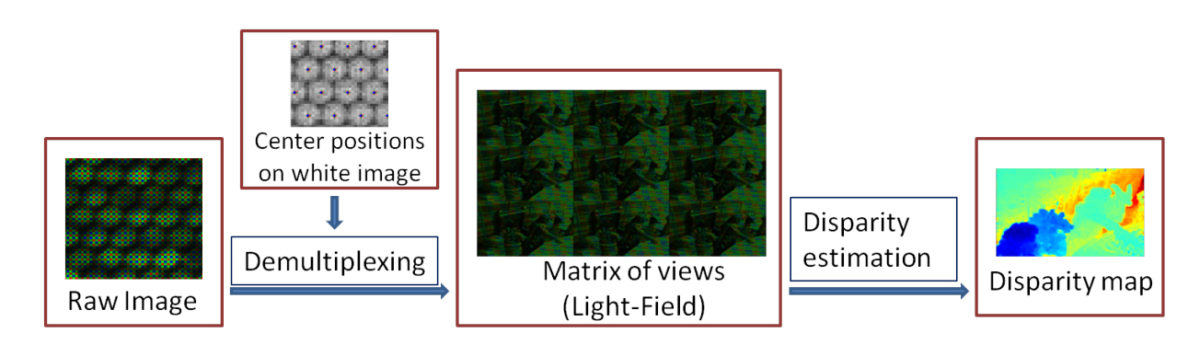

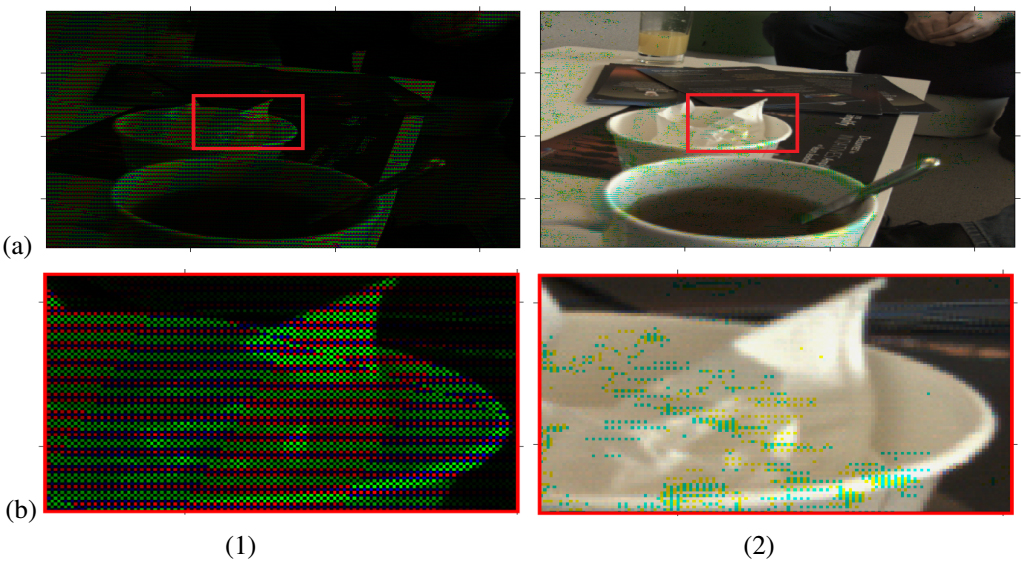

In this paper we propose a post-processing pipeline to accurately recover the views (light field) from the raw data of a plenoptic camera such as Lytro, and to estimate disparity maps from such a light field in a novel way. First, the microlens centers are estimated and the raw image is demultiplexed without demosaicking it beforehand. Next, we present a new block-matching algorithm to estimate disparities for the mosaicked plenoptic views. Our algorithm makes the best use of the configuration given by the plenoptic camera: (i) the views are horizontally and vertically rectified and have the same baseline, and therefore (ii) at each point, the vertical and horizontal disparities are the same. Our strategy of demultiplexing without demosaicking avoids image artifacts due to view cross-talk and helps to estimate more accurate disparity maps. Finally, we compare our results with state-of-the-art methods.

“Accurate Disparity Estimation for Plenoptic Images”, N. Sabater, M. Seifi, V. Drazic, G. Sandri, P. Perez, European Conference on Computer Vision (ECCV) 2014 Workshops.
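The sketch below shows the constraint the abstract exploits. The views are rectified, lie on a regular grid and share a common baseline. A point with disparity d therefore appears in view (k, l) shifted by (k*d, l*d) relative to the central view, so a single d can be scored jointly over all views. This is a simplified illustration, not the paper's algorithm, and it assumes:

- integer disparities;
- grayscale views that have already been demultiplexed;
- a sum-of-absolute-differences cost aggregated over a square block;
- wrap-around at the borders via `np.roll`.

It ignores the Bayer mosaic that the paper handles, and all names are hypothetical.

```python
import numpy as np


def box_sum(img: np.ndarray, r: int) -> np.ndarray:
    """Sum of img over a (2r+1)x(2r+1) window centered on each pixel (edge-padded)."""
    padded = np.pad(img, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    c = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    k = 2 * r + 1
    return c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]


def estimate_disparity(views: dict, d_range, radius: int = 2) -> np.ndarray:
    """Winner-take-all integer disparity for the central view (0, 0).

    views maps grid offsets (k, l) to 2D arrays of equal shape.
    """
    ref = views[(0, 0)]
    best_cost = np.full(ref.shape, np.inf)
    best_d = np.zeros(ref.shape, dtype=int)
    for d in d_range:
        cost = np.zeros(ref.shape)
        for (k, l), view in views.items():
            if (k, l) == (0, 0):
                continue
            # Same disparity on both axes: undo the shift (k*d, l*d) of view (k, l).
            aligned = np.roll(view, shift=(-k * d, -l * d), axis=(0, 1))
            cost += np.abs(aligned - ref)
        cost = box_sum(cost, radius)  # aggregate the cost over a block
        better = cost < best_cost
        best_cost[better] = cost[better]
        best_d[better] = d
    return best_d
```

Tying the horizontal and vertical shifts to one unknown d lets every view in the grid vote for the same hypothesis. This is where a plenoptic light field gains robustness over a single stereo pair.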