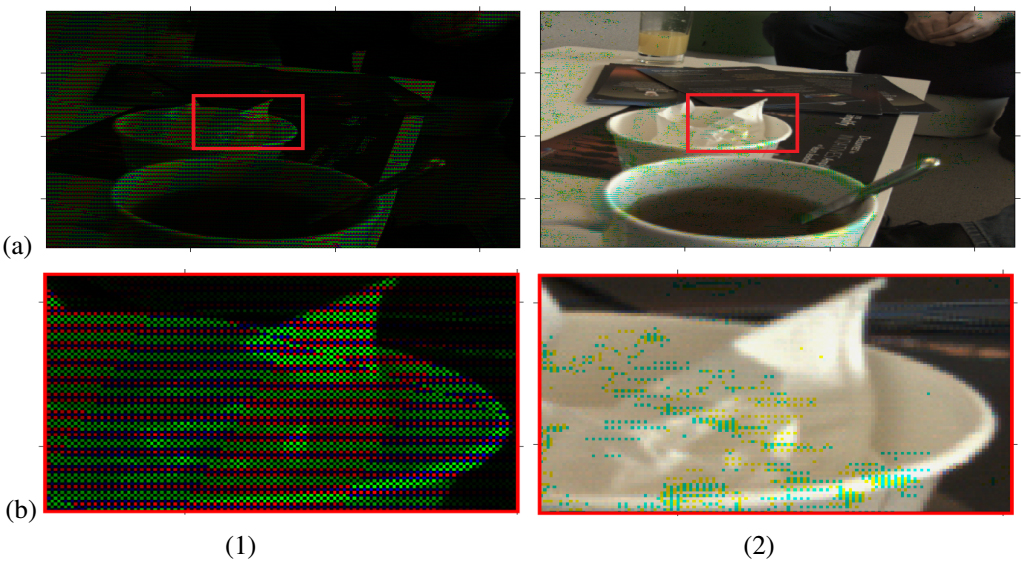

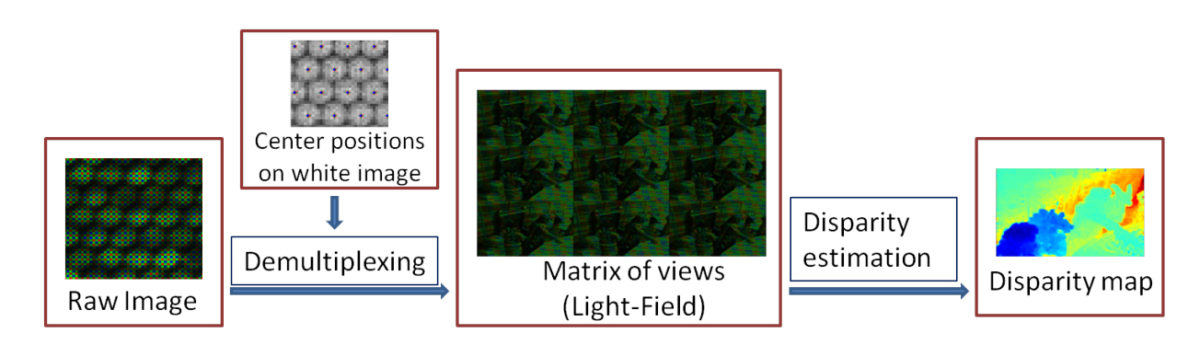

Light-field imaging was recently brought to the mass market by Lytro, a hand-held plenoptic camera. Thanks to a microlens array placed between the main lens and the sensor, the captured data contains views of the scene from different viewpoints. This enables several post-capture applications, e.g., computationally changing the main lens focus. The raw data conversion in such cameras has, however, barely been studied in the literature. The goal of this paper is to study the particularly overlooked problem of demosaicking the views for plenoptic cameras such as Lytro. We exploit the redundant sampling of scene content in the views and show that disparities estimated from the mosaicked data can guide the demosaicking, resulting in fewer artifacts than state-of-the-art methods. In addition, by properly addressing the view demultiplexing step, we take a first step towards light-field super-resolution with negligible computational overhead.
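
To make the term "view demultiplexing" concrete, the following is a minimal sketch of the idea and not the method of the paper. It assumes an idealized sensor in which the microlenses form a square grid aligned with the pixel grid and each microlens covers exactly `pitch` × `pitch` pixels; real plenoptic cameras typically require calibrated microlens centers and resampling. The function name `demultiplex_views` and the parameter `pitch` are hypothetical.

```python
import numpy as np


def demultiplex_views(raw, pitch):
    """Rearrange a raw lenslet image into sub-aperture views.

    Assumption (idealized, for illustration only): square microlens grid,
    aligned with the pixel grid, each microlens covering pitch x pitch pixels.

    Returns an array `views` of shape (pitch, pitch, ny, nx) such that
    views[u, v, i, j] == raw[i * pitch + u, j * pitch + v],
    i.e. view (u, v) collects the pixel at offset (u, v) under every microlens.
    """
    h, w = raw.shape
    # Crop so that the image holds a whole number of microlenses.
    h -= h % pitch
    w -= w % pitch
    raw = raw[:h, :w]
    ny, nx = h // pitch, w // pitch
    # (ny, pitch, nx, pitch) -> (pitch, pitch, ny, nx)
    return raw.reshape(ny, pitch, nx, pitch).transpose(1, 3, 0, 2)


if __name__ == "__main__":
    raw = np.arange(36).reshape(6, 6)  # toy 6x6 "sensor", 2x2 microlenses
    views = demultiplex_views(raw, pitch=3)
    print(views.shape)
    print(views[0, 0])
```

Because the raw image is still mosaicked at this stage, each extracted view inherits only the color samples of the sensor pixels it is built from, which is why demosaicking the views is a problem in its own right.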

“Disparity-guided demosaicking of light field images”, M. Seifi, N. Sabater, V. Drazic, P. Perez, IEEE International Conference on Image Processing (ICIP), 2014